Section Two: How We Test Creative Now

How We Have Been Testing Creative Until Now

When testing creative, we typically tested three to six videos alongside a control video using Facebook’s split test feature. We showed these ads to broad audiences or to 5-10% Lookalike (LAL) audiences, restricted distribution to the Facebook News Feed on Android only, and used mobile app install (MAI) bidding to get about 100-250 installs.

If one of those new “challenger” ads beat the control video’s IPM (installs per 1,000 impressions) or came within 10-15% of it, we launched those potential new winners into the ad sets with the control video and let them fight it out to generate ROAS.
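The promotion rule above can be sketched in a few lines of Python. This is a minimal illustration, not code from our workflow or from any Facebook API: the `AdResult` class, the `promote_challengers` helper, and the 15% default tolerance are hypothetical names and values chosen to mirror the description.

```python
from dataclasses import dataclass


@dataclass
class AdResult:
    """Hypothetical container for one ad's test results."""
    name: str
    installs: int
    impressions: int

    @property
    def ipm(self) -> float:
        """Installs per mille: installs per 1,000 impressions."""
        return 1000 * self.installs / self.impressions if self.impressions else 0.0


def promote_challengers(control: AdResult, challengers: list[AdResult],
                        tolerance: float = 0.15) -> list[str]:
    """Return challengers whose IPM beats the control or falls within `tolerance` of it."""
    threshold = control.ipm * (1 - tolerance)
    return [c.name for c in challengers if c.ipm >= threshold]


# Hypothetical numbers: the control has an IPM of 8.0, so the cut-off is 6.8.
control = AdResult("control", installs=100, impressions=12500)
challengers = [
    AdResult("a", installs=60, impressions=7000),  # IPM ~8.57, beats the control
    AdResult("b", installs=50, impressions=8000),  # IPM 6.25, more than 15% behind
    AdResult("c", installs=45, impressions=6500),  # IPM ~6.92, within 15%
]
print(promote_challengers(control, challengers))
```

Treating "within 10-15%" as a single tolerance parameter keeps the rule easy to tighten or loosen per account.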

We have seen hints of what we are about to describe across numerous ad accounts, and advertisers spending seven figures have confirmed that they have seen the same thing. For purposes of explanation, though, let us focus on one client of ours and how their ads performed in creative tests.

In November and December 2019, we produced more than 60 new video concepts for this client. All of them failed to beat the control video’s IPM. This struck us as odd, and it was statistically very unlikely. We expected a new winner 5% of the time, or 1 out of every 20 videos, which meant about 3 winners. If each video independently had that 5% chance, the odds of 60 losses in a row would be 0.95^60, or about 4.6%. Because we felt confident in our creative ideas, we decided to look deeper into our testing methods.
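The "very unlikely" claim is a binomial calculation. The sketch below assumes, as a simplification, that every video is an independent trial with the same 5% chance of beating the control. The helper name `prob_at_most_k_winners` is hypothetical.

```python
from math import comb


def prob_at_most_k_winners(n_tests: int, p_win: float, k: int = 0) -> float:
    """Binomial probability of seeing at most k winners in n independent tests."""
    return sum(comb(n_tests, i) * p_win**i * (1 - p_win) ** (n_tests - i)
               for i in range(k + 1))


n_videos, hit_rate = 60, 0.05
expected_winners = n_videos * hit_rate                  # 3 winners expected
p_zero = prob_at_most_k_winners(n_videos, hit_rate)     # chance of no winners at all
print(f"expected winners: {expected_winners:.1f}, P(0 winners): {p_zero:.3f}")
```

Since the client produced more than 60 concepts, the true probability of zero winners would be even lower than this figure.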

Traditional testing methodology includes the idea of testing the testing system itself, known as an A/A test. An A/A test is like an A/B test, but instead of testing multiple creatives, you run the same creative in each “slot” of the test.

If your testing system or platform is working as expected, all “variations” should produce similar results, provided you gather enough data to get close to statistical significance. If your A/A test results differ widely, and the testing platform or methodology concludes that one variation significantly outperforms or underperforms the others, there could be an issue with the testing method or with the quantity of data gathered.
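One way to build intuition for A/A noise is to simulate it. The sketch below assumes that every variant is the same ad with the same true IPM and that each impression independently converts with probability IPM / 1000. The function name and parameters are hypothetical and are not tied to Facebook's delivery system. Any spread between the returned IPMs is pure chance.

```python
import random


def simulate_aa_test(n_variants: int, impressions_each: int, true_ipm: float,
                     seed: int = 0) -> list[float]:
    """Simulate an A/A test in which every variant shares the same true IPM.

    Returns the observed IPM for each variant.
    """
    rng = random.Random(seed)
    rate = true_ipm / 1000  # install probability per impression
    observed = []
    for _ in range(n_variants):
        installs = sum(1 for _ in range(impressions_each) if rng.random() < rate)
        observed.append(1000 * installs / impressions_each)
    return observed


# Five identical ads with about 100 installs in total (about 20 each at a true IPM of 8).
ipms = simulate_aa_test(n_variants=5, impressions_each=2500, true_ipm=8.0, seed=1)
print("observed IPMs:", [round(x, 1) for x in ipms])
print("lowest:", min(ipms), "highest:", max(ipms))
```

With only about 20 installs per variant, observed IPMs for identical ads can easily differ by tens of percent. This is why a volume like 100 installs is thin evidence in either direction.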

First A/A test of video creative

Here is how we set up an A/A test to validate our non-standard approach to Facebook testing. The purpose of this test was to find out whether Facebook maintains a creative history for the control and thereby gives it a performance boost that makes it very difficult to beat when the ad set is not allowed to exit the learning phase and reach statistical significance.

We copied the control video four times and added one black pixel, in a different location, to each of the new “variations.” To a human these would look like the same video, but to the testing platform they would be different videos. The goal was to get Facebook to assign a new hash ID to each cloned video, then test them all together and observe their IPMs.
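The principle behind the one-pixel trick is that any change to a file's bytes produces a different fingerprint. How Facebook actually assigns IDs to uploaded videos is not public. In the sketch below, SHA-256 over a tiny raw RGB frame stands in for that process. The helpers `frame_digest` and `add_black_pixel` are hypothetical illustrations. A real video would also be re-encoded on upload, so this only demonstrates the idea.

```python
import hashlib


def frame_digest(pixels: bytes) -> str:
    """SHA-256 fingerprint of raw frame bytes (a stand-in for a platform-assigned ID)."""
    return hashlib.sha256(pixels).hexdigest()


def add_black_pixel(pixels: bytearray, width: int, x: int, y: int) -> bytearray:
    """Return a copy of an RGB frame with the pixel at (x, y) set to black."""
    clone = bytearray(pixels)
    offset = 3 * (y * width + x)          # 3 bytes (R, G, B) per pixel, row-major
    clone[offset:offset + 3] = b"\x00\x00\x00"
    return clone


width, height = 4, 4
control = bytearray([200, 180, 160] * width * height)   # flat, light-colored 4x4 frame
corners = [(0, 0), (3, 0), (0, 3), (3, 3)]               # one black pixel per clone
clones = [add_black_pixel(control, width, x, y) for x, y in corners]

digests = {frame_digest(bytes(frame)) for frame in [control, *clones]}
print(len(digests), "distinct fingerprints for", 1 + len(clones), "near-identical frames")
```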

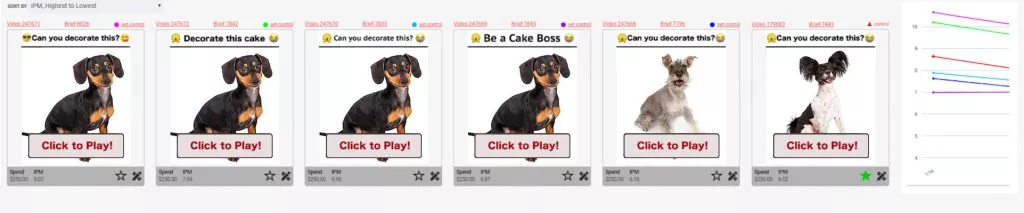

These are the ads we ran, except that we did not run the dachshund. To avoid disclosing the advertiser’s identity, I have replaced the actual ads with cute dogs. The IPM for each ad appears on the far right of the image.

Things to note here:

The far-right ad (in the blue square) is the control.

All the other ads are clones of the control with one black pixel added.

The far-left clone outperformed the control by 149%. As described earlier, a difference like that should not happen if the platform is truly variation-agnostic. However, to save money, we did not follow the best practice of allowing the ad set(s) to exit the learning phase.

We ran this test for only 100 installs, which is our standard operating procedure for creative testing.

Once the first test reached 100 installs, we paused the campaign to analyze the results. We then turned the campaign back on and scaled up to 500 installs to get closer to statistical significance. We wanted to see whether more data would normalize the IPMs (in other words, whether performance would settle back down to more even results across the variations). It did not: the results at 500 installs remained the same. Note: the ad set(s) still did not exit the learning phase, so once again we did not follow Facebook’s best practice.
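To judge whether a gap like this is more than noise, a standard approach is a two-proportion z-test on install rates (installs / impressions). The sketch below uses the pooled normal approximation, which becomes rough when install counts are small. We did not use this test in the experiments described here. The helper names and all the numbers in the example are hypothetical.

```python
from math import erf, sqrt


def ipm(installs: int, impressions: int) -> float:
    """Installs per 1,000 impressions."""
    return 1000 * installs / impressions


def lift_pct(variant_ipm: float, control_ipm: float) -> float:
    """Relative lift of a variant over the control, in percent."""
    return 100 * (variant_ipm - control_ipm) / control_ipm


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Two-sided z-test for a difference in install rates.

    Returns (z, p_value) using the pooled-proportion normal approximation.
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    normal_cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))   # standard normal CDF at |z|
    return z, 2 * (1 - normal_cdf)


# Hypothetical counts: a clone with 14 installs vs. a control with 6, on 1,500 impressions each.
clone_ipm, control_ipm = ipm(14, 1500), ipm(6, 1500)
z, p = two_proportion_z_test(14, 1500, 6, 1500)
print(f"lift {lift_pct(clone_ipm, control_ipm):.0f}%, z = {z:.2f}, p = {p:.3f}")
```

With these hypothetical counts, a lift of roughly 133% still does not reach the conventional p < 0.05 threshold. This illustrates how large IPM gaps can appear at low install volumes without being statistically significant.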

The results of this first test, while not statistically significant, were surprising enough to merit additional tests. So, we tested on!

Second A/A test of video creative

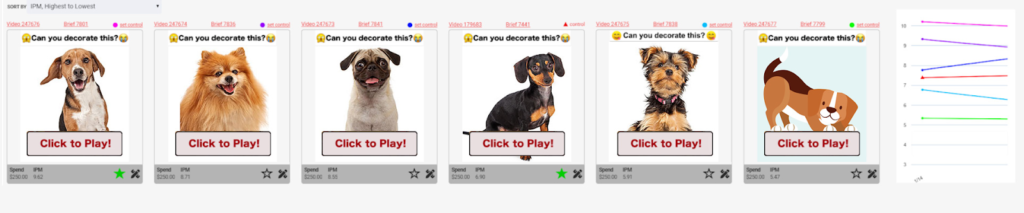

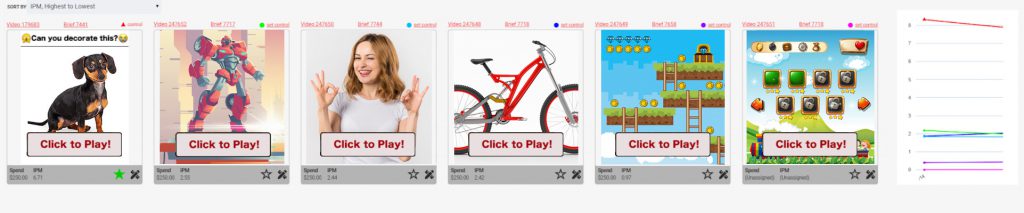

For our second test, we ran the six videos shown above. Four of them were controls with different headers, and two were new concepts that were very similar to the control. Again, we did not run the dachshund; the dogs were inserted to protect the advertiser’s identity and to offer you cuteness!

IPMs for all ads ranged from 7 to 11, even for the new ads that did not share a thumbnail with the control. The IPM for each ad appears on the far right of the image.

Third A/A test of video creative

Next, we tested six videos: one control and five variations that were visually similar to the control, although one of them looked very different to a human viewer. IPMs ranged from 5 to 10. The IPM for each ad appears on the far right of the image.

Fourth A/A test of video creative

This was when we had our “aha!” moment. We tested six very different video concepts: the control video and five brand-new ideas, all of which were visually very different from the control and did not share its thumbnail.

The control’s IPM stayed consistently in the 8-9 range, while the IPMs for the new visual concepts ranged from 0 to 2. The IPM for each ad appears on the far right of the image.

Here are our impressions from the above tests

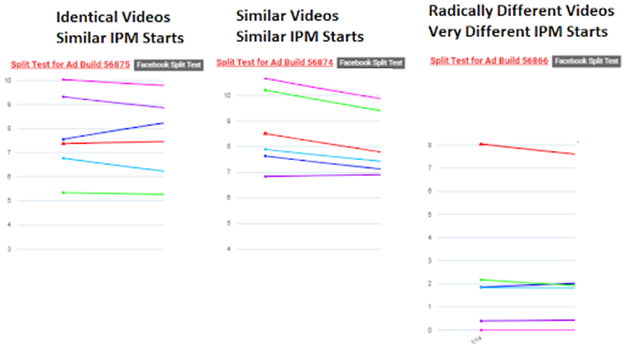

Facebook’s split tests maintain a creative history for the control video. Under our non-standard IPM testing practice, which does not reach statistical significance, this gives the control an advantage.

We are unsure whether Facebook can group variations that have a similar look and feel to the control. If it can, similar-looking ads could also start with a higher IPM because of the control’s history. Alternatively, similar thumbnails alone may influence IPM when results are not statistically significant.

Creative concepts that are visually very different from the control appear not to share its creative history. IPMs for these variations are independent of the control.

It appears that new visual concepts that are “out of the box” compared with the control may require more impressions before their performance can be quantified.

Our IPM testing methodology appears to be valid if we do NOT use a control video as the benchmark for winning.
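A sketch of what "not using the control as the benchmark" could look like follows: challengers are ranked only against each other. Each is scored by the lower bound of a Wilson score interval on its install rate, so thinly tested concepts are not over-credited. The Wilson interval is our illustrative choice, not a method described in the tests above. The function names and example counts are hypothetical.

```python
from math import sqrt


def ipm_wilson_interval(installs: int, impressions: int,
                        z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for IPM (installs per 1,000 impressions)."""
    if impressions == 0:
        return 0.0, 1000.0  # no data: the rate could be anything
    n, p = impressions, installs / impressions
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return 1000 * max(0.0, center - half), 1000 * min(1.0, center + half)


def rank_without_control(results: dict[str, tuple[int, int]], control: str) -> list[str]:
    """Rank challengers against each other by the lower bound of their IPM interval,
    leaving the control out of the comparison entirely."""
    challengers = {name: r for name, r in results.items() if name != control}
    return sorted(challengers,
                  key=lambda name: ipm_wilson_interval(*challengers[name])[0],
                  reverse=True)


# Hypothetical (installs, impressions) per ad.
results = {
    "control": (80, 10000),
    "concept_a": (3, 2000),
    "concept_b": (1, 1500),
    "concept_c": (12, 2500),
}
print(rank_without_control(results, control="control"))
for name, (installs, impressions) in results.items():
    low, high = ipm_wilson_interval(installs, impressions)
    print(f"{name}: IPM {ipm_wilson_interval.__defaults__ and 1000 * installs / impressions:.1f}, "
          f"95% interval {low:.1f}-{high:.1f}")
```

The interval width also makes the point about "out of the box" concepts concrete: the fewer impressions a concept has, the wider its interval and the less a low early IPM tells you.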

IPM Testing Summary

Here are the line graphs from the second, third, and fourth tests.

In the next section, we will explain what we think all this means and how to work with the implications of these findings in your campaigns.